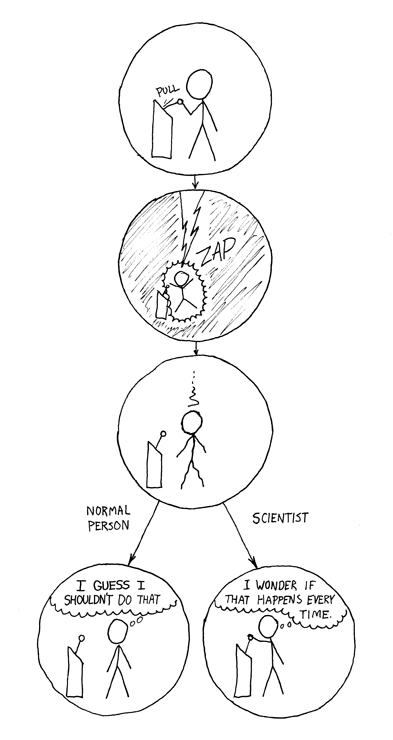

(One of my favorite xkcd cartoons. According to recent research, maybe we're all scientists? At least, we keep pulling the lever over and over again...)

Do people hate being alone with their thoughts so much that they will shock themselves to avoid thinking? In a series of studies, Wilson et al. (2014) asked people to spend time quietly thinking without distractions and concluded that people generally found the experience aversive. Others have reinterpreted this conclusion, but the general upshot is that people at least didn't find it particularly pleasurable. Several manipulations (e.g. planning the thing you were going to think about) also didn't make the experience much better. Overall this is a very interesting paper, and I really appreciated the emphasis on a behavior – mind wandering – that has received much more attention in the neuroscience literature than in psychology.

The part of the paper that got the most attention, however, was a study that measured whether people would give themselves an electric shock while they were supposed to be quietly thinking. The authors shocked the participants once and then checked to make sure that the participants found it sufficiently aversive to say they would pay money to avoid having it done to them again. But then when the participants were left to think by themselves, around two thirds of men and a quarter of women went and shocked themselves again, often several times. The authors interpret this finding as follows:

> What is striking is that simply being alone with their own thoughts... was apparently so aversive that it drove many participants to self-administer an electric shock that they had earlier said they would pay to avoid.

Something feels wrong about this interpretation as it stands. Here are my two conflicting intuitions. First, I actually like being alone with my own thoughts. I sometimes consciously create time to space out and think about a particular topic, or about nothing at all. Second, I am absolutely certain I would have shocked myself.

I would have shocked myself at least once, and possibly five or more times. I might even have been the guy who shocked himself 190 times and had to be excluded from the study. Even if I had said I would pay money to avoid having someone else shock me, I definitely would have done it to myself. Why? I don't really know.

There are many things that I would pay money not to have someone do to me: stick a paperclip under my fingernail, pluck out hairs from my beard, bite my nail to the quick. Yet I will sometimes do these things to myself, even though they are painful and I will regret it afterwards. The exploration of small, moderately painful stimuli is something that I do on a regular basis. (Other people do these things too.) I am not sure why I do them, but I don't think it's because I hate being bored so much that I would rather be in pain.

Boredom and pain are not zero sum, in other words. Pain can drive boredom away, but the two can coexist as well. I don't do these things when I'm engaged in something else like reading the internet on my phone. But I do actually do them on a regular basis when I'm listening to a talk or thinking about a complicated paper.

I don't know why I cause myself minor pain sometimes. But it feels like there are at least two component reasons. One is some kind of automatic exploration (they happen when my mind is otherwise occupied, as the examples above show). But I also do these sorts of things in part because I want to see how they feel. Kind of like ripping off a hangnail or playing with a sharp knife. There's some novelty seeking involved, but doing them again and again isn't quite about novelty seeking; we've all had a hangnail or pulled out a hair. Perhaps it's about the exact sensation and the predictions we make – will it feel better or worse? Can I predict exactly what it will be like?

What I'm arguing is that these things are mysterious on any view of humans as rational agents. The Wilson paper doesn't sufficiently acknowledge this mystery, instead choosing to treat people as purely rational: they said they would pay to avoid X, but then they chose X anyway, so it must be because X is better than Y. But there isn't a direct, utility-theoretic tradeoff between mind-wandering and electric shock. Suppose Wilson et al. had played Enya to participants and found that they shocked themselves (which I bet I would have). Would they then conclude that Enya is so bad that people shock themselves to get away from her?